Memory Management

Process by which OS efficiently handles a computer’s memory hierarchy to allocate space to processes and optimize performance.

What is the need?

- Maximizes memory utilization

- Supports multitasking by allocating and deallocating memory as needed

- Protects memory space of one process from another

Role of OS in memory management

- Memory Allocation: allocates memory to processes as needed

- Memory Protection: protects memory space of one process from another

- Memory Sharing: allows processes to share memory space

- Memory Swapping: swaps data between RAM and disk to free up space

- Memory Mapping: maps memory addresses to physical addresses

- Fragmentation: reduces fragmentation to optimize memory usage

- Optimization: optimizes memory usage to improve performance

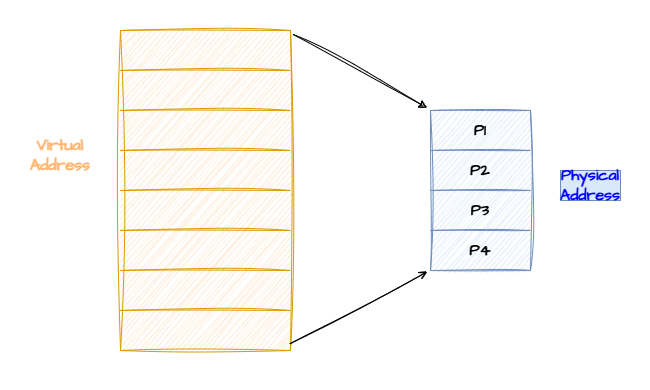

Address Spaces

Logical (Virtual) vs Physical Address Space

Logical addresses are generated by CPU and are used by the OS to reference memory locations. Physical addresses are actual locations in memory. The OS uses the MMU to map logical addresses to physical addresses.

Address Binding

Binding maps logical addresses to physical addresses.

- Compile Time Binding: logical addresses are fixed to physical addresses at compile time, used in systems with no memory relocation.

- Load Time Binding: logical addresses are fixed to physical addresses at load time, allows programs to load into different memory locations.

- Execution Time Binding: binding is done at runtime, allowing processes to move into different memory locations during execution, require the support of MMU.

Memory Allocation

Contiguous Memory Allocation

Memory is allocated in contiguous blocks, which is simple but leads to fragmentation.

- Single Partition Allocation: the entire memory except the area where OS is in is allocated to a single process. Doesn’t allow multitasking. was used in early batch systems.

- Multiple Partition Allocation: memory is divided into multiple partitions fixed or variable in size. Processes are then loaded into these partitions. In fixed-size partitions, it can lead to internal fragmentation, whilst the variable-size partitions can lead to external fragmentation.

Non-Contiguous Memory Allocation

Processes are divided and loaded into memory blocks that don’t have to be contiguous / adjacent. This mostly eliminates fragmentation issues.

- Segmentation: divides memory into segments based on logical divisions of the program like the code, data, stack, heap. Each segment has a base address and limit (size). Still can lead to external fragmentation.

- Paging: divides memory into fixed-size blocks called pages (logical memory) and frames (physical memory). Pages are mapped to frames via a page table. Eliminates external fragmentation but can introduce internal fragmentation due to unused space within a page.

Paging and Segmentation

Paging

Components of Paging:

- Page Table: maps logical pages to physical frames present in memory.

- Page Frame: a fixed-size block of physical memory where a page is loaded.

- Page Table Base Register (PTBR): points to the page table in memory.

- Page Table Length Register (PTLR): indicates the size of the page table.

- Page Size: size of the page in memory.

- Frame Size: size of the frame in memory.

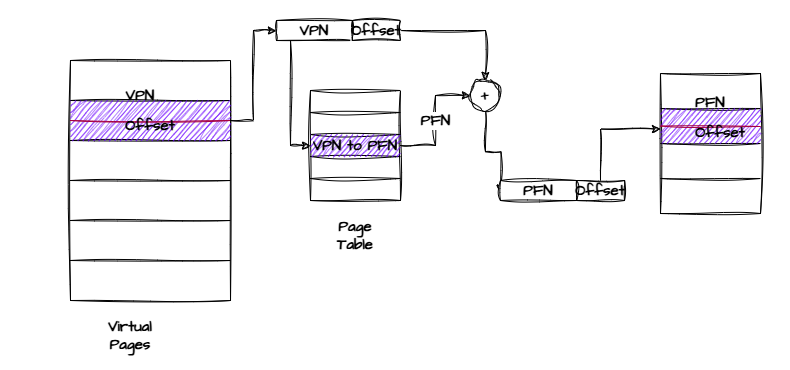

How does paging work?

- The logical address is divided into page number and offset.

- The page number is used to index the page table to get the frame number.

- The frame number and offset are combined to get the physical address.

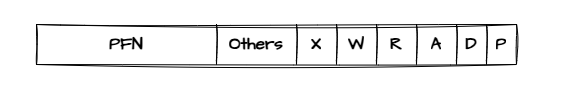

Contents of a Page Table Entry:

Different types of Page Tables

- Flat Page Table: single level table with a single entry for each page. Simple but inefficient for large address spaces.

- Hierarchical Page Table: breaks the page table into multiple levels to reduce the size of the page table and the memory overhead. Each level has a page table that maps to the next level. The top-level page table is stored in the PTBR. The page number is divided into multiple parts to index each level of the page table. The offset is used to access the frame number. This reduces the memory overhead but increases the time to access the page table.

- Inverted Page Table: a single table that maps physical frames to logical pages. Each entry contains the frame number, process ID, and page number. Reduces memory overhead but increases the time to access the page table.

- Hashed Page Table: uses a hash function to map page numbers to frame numbers.

Segmentation

Components of Segmentation:

- Segment Table: stores the base address and size (limit) for each segment.

- Segment Offset: specifies the location within the segment. the offset is added to the base address to get the physical address.

How does segmentation work?

- Memory is divided into Segments based on the logical structure of the program, Segments can be of different sizes.

- We store the segment number and the offset in the segment table.

Comparison: Paging vs. Segmentation

| Feature | Paging | Segmentation |

|---|---|---|

| Division | Fixed-size pages and frames. | Variable-sized segments. |

| Addressing | Page number + Offset. | Segment number + Offset. |

| Table Used | Page Table. | Segment Table. |

| Fragmentation | Internal fragmentation (unused space in pages). | External fragmentation (gaps between segments). |

| Use Case | OS and hardware memory management. | Reflects logical program structure. |

| Flexibility | Less flexible; fixed sizes. | More flexible; aligns with logical structure. |

Virtual Memory

Virtual memory is a memory management technique that creates an illusion of a larger memory space by using a combination of physical memory (RAM) and disk space. It allows processes to use more memory than is physically available.

What’s the need for it?

- Allows programs larger in size than the physical memory to run.

- Improves multitasking by isolating process memory.

- Enhances system performance by reducing physical requirements.

How does it work?

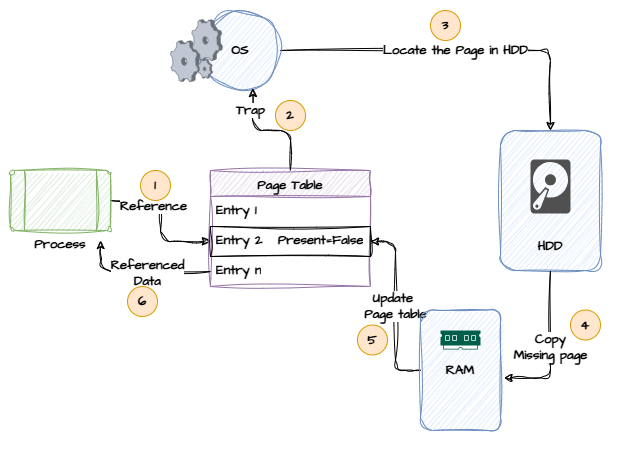

Demand Paging

Pages are loaded into memory only when required. If a program accesses a page that is not in memory, a page fault occurs.

Page fault

The OS pauses the process, loads the required page from the disk into memory, updates the page table, and resumes the process.

Page Replacement Algorithms

When memory is full, the OS must decide which page to replace.

- FIFO: replaces the oldest loaded page. Can lead to poor performance due to the Belady’s Anomaly.

- LRU (Least Recently Used): replaces the page that has not been used for the longest time. Requires more overhead to track page usage.

- LFU (Least Frequently Used): replaces the page that has been used the least number of times.

- Optimal: replaces the page that will not be used for the longest time in the future. Theoretically optimal algorithm with the least amount of page faults but almost impossible to implement practically due to requirement of future knowledge.

- Clock: maintains a use bit for each page, pages with use bit = 0 are replaced.

Thrashing

Occurs when the system spends more time handling page faults than executing processes! Can be caused due to insufficient memory, poor page replacement algorithms, or excessive multitasking.

Working Set Model

Tracks the set of pages actively used by a process over a fixed time window, and ensures processes get enough memory to avoid thrashing.

Memory Allocation Strategies

- First Fit: allocates the first available block that is large enough for the process. Scans memory from the beginning, stops at the first free block of sufficient size. Can lead to fragmentation as small unusable gaps are made.

- Best Fit: allocates the smallest block that is large enough for the process. Scans all the free blocks and chooses the one with the least remaining space. It reduces fragmentation (internal) but is slower due to the searching of all free blocks.

- Worst Fit: allocates the largest free block available. Scans all free blocks and chooses the one with the most space. Leaves larger free blocks for future allocations but can increase fragmentation.

Memory Pools

They are pre-allocated blocks of memory reserved for specific types of objects. This technique is used to speed up memory allocation and deallocation by reusing blocks.

How it works:

- The OS maintains a pool of free memory blocks for specific object types.

- When a process requests memory, it is allocated from the pool.

- When the memory is no longer needed, it is returned to the pool.

Swapping

A process is temporarily moved from RAM to disk (swap space) to free memory for other processes. When needed back, the process is swapped back into memory. OS selects a process for swapping which is IDLE or low priority. Saves the process’s state and loads the required memory pages. Swap space is a reserved area on the disk for swapped out processes.

Spooling

Spooling (Simultaneous Peripheral Operations On-Line) is a technique where data is temporarily held to be used and executed by a device, program, or system. It is commonly used for managing data for printers and other peripheral devices.

How it works:

- Data is sent to a spool, or buffer, where it is held until the device is ready to process it.

- The device processes the data from the spool at its own pace.

Benefits:

- Allows the CPU to continue working on other tasks while the device processes the data.

- Improves system efficiency by managing data flow to slower devices.

Fragmentation

Internal Fragmentation

Occurs when memory is divided into fixed-size blocks and a process is allocated a block larger than its size. The unused space within the block is wasted. Eg. A process needs 7KB but is allocated to a block of 25KB.

Solution would be to use smaller block sizes (e.g. paging) or use dynamic partitioning.

External Fragmentation

When free memory is divided into non-contiguous blocks, making it unusable for large processes that exceed the size of the largest free block.

Solution would be compaction when we rearrange memory to merge free spaces or non-contiguous memory allocation like using paging or segmentation to break processes into smaller units.

Memory Compaction

Used to reduce external fragmentation by moving allocated memory blocks together and leaving a large block of free memory. This helps in allocating larger contiguous memory blocks.

How it works:

- The OS periodically scans memory and moves processes to create a large contiguous block of free memory.

- This process can be time-consuming and may require process suspension.

TLB (Translation Lookaside Buffer)

Role of TLB in Virtual Memory:

TLB is a small, fast cache in the CPU that stores recently used mappings of logical addresses to physical addresses. Without the TLB, every memory access would require a page table lookup in RAM, which is comparatively slower. It is about 100 times faster than RAM. TLB speeds up address translation by quickly providing the physical frame for a given page.

A TLB Hit:

- When a required page-to-frame mapping is found in the TLB, it’s called a hit.

- CPU directly retrieves the physical address, avoiding a slower lookup.

A TLB Miss:

- When the required mapping is not found in the TLB, it’s called a miss.

- OS has to access the page table in RAM to retrieve the mapping which is slower.

- Once the mapping is retrieved, it is stored in the TLB for future use.

Common Performance Metrics

Page Fault Rate: The fraction of memory accesses that result in a page fault. Lower is better. A lower rate means that most pages requested are in memory, while a higher rate indicates poor performance.

Page Fault Rate = (Number of Page Faults) / (Number of Memory Accesses)

Access Time in Paging and TLB: The effective memory access time depends on TLB performance.

- TLB hit: Access Time = TLB Access Time

- TLB miss: Access Time = TLB Access Time + Page Table Access Time

- Effective Access Time: overall average access time, depends on the hit ratio (H) and miss ratio (1-H).

Effective Access Time = H * TLB Access Time + (1-H) * (TLB Access Time + Page Table Access Time)

Kernel Memory Management

Kernel manages memory differently than user processes, as it requires higher performance and needs to allocate/deallocate memory frequently for small objects like PCBs.

Slab Allocation

A technique used to manage memory for objects of the same size.

How it works:

- Memory is divided into slabs, which are collections of blocks of fixed size.

- Each slab is a part of a cache for a specific type of object.

- Objects are pre-allocated, so when the kernel needs memory, it retrieves a free block from the appropriate slab.

Benefits:

- Reduces fragmentation.

- Speeds up memory allocation and deallocation by reusing blocks.

- Ensures memory alignment due to same size.

Buddy System

In this, the memory is divided into blocks of sizes that are in power 2. For eg, 1KB, 2KB, 4KB, 8KB…

How it works:

- Kernel maintains a list of free blocks for each size 2^0, 2^1, 2^2, etc…

- When a process requests memory, the smallest block large enough is allocated.

- If a larger block is split, the “buddies” (halves) are tracked for potential merging when free.

This causes fast allocation and deallocation and merging the buddies reduces the external fragmentation. Internal fragmentation can still occur when block size exceeds process needs.

Copy on Write (COW)

A memory optimization technique used when a process creates a copy of itself, for eg. fork.

How it works:

- Instead of copying the entire memory immediately, both parent and child processes share the same physical memory.

- The memory is marked as read-only.

- When either process tries to write to the memory, a copy is made, and each process gets its own copy of the page.

This reduces memory usage for processes that mostly read shared data and speeds up process creation.

This uses page sharing where pages are shared until a write occurs, and dirty bit which indicates a page has been modified and requires copying.

NUMA (Non-Uniform Memory Access)

NUMA is a memory architecture used in multi-processor systems where memory access time depends on the memory location relative to the processor.

How it works:

- The system is divided into multiple nodes, each containing a processor and memory.

- Memory access is faster within the same node and slower across nodes.

Memory-Mapped Files

Memory-mapped files allow files to be accessed as if they were part of the main memory, providing a convenient way to perform file I/O.

How it works:

- The OS maps a file into the process’s address space.

- The file can be accessed using regular memory operations.

Memory Leaks

Memory leaks occur when a program allocates memory but fails to deallocate it after use, leading to wasted memory resources.

Causes:

- Improper memory management.

- Failure to release memory after use.

Checkpoints

Checkpointing is a technique used to save the state of the system or process to disk. This allows recovery in case of a failure or crash.

External Sort

Is used to sort datasets that are too large to fit into memory, relying on external storage like disk.

When is it needed?

- When the dataset is too large to fit into memory.

- When the dataset is already sorted and needs to be merged.

Process:

-

Sorting Phase: The data is divided into manageable chunks that fit into memory. Each chuck is sorted in memory using an internal sorting alogrithm like quicksort or mergesort. Sorted chunks are also known as runs.

-

Merging Phase: The sorted runs are merged together to produce the final sorted output. This is done by K-way merge where K is the number of runs being merged at a time.

External Sorting in Java

For a small example in Java, we have 3 files.

MyBufferReader.javaExternalSort.javaTest.java

MyBufferReader.java

The MyBufferReader class is a custom buffer reader that reads integers from a temporary file. It implements the Comparable interface to allow comparison between different buffer readers based on their current number.

import java.util.*;

import java.io.*;

public class MyBufferReader implements Comparable<MyBufferReader> {

private final File tempFile;

private Integer currentNumber;

public boolean hasNumber = false;

private BufferedReader br;

public MyBufferReader(File tempFile) throws IOException {

this.tempFile = tempFile;

br = new BufferedReader(new FileReader(this.tempFile));

reload();

}

private void reload() throws IOException {

String s = br.readLine();

if (s == null) {

hasNumber = false;

currentNumber = null;

}

else {

currentNumber = Integer.parseInt(s);

hasNumber = true;

}

}

public void close() throws IOException {

this.br.close();

}

public int peek() {

return currentNumber;

}

public int pop() throws IOException {

int temp = peek();

reload();

return temp;

}

@Override

public int compareTo(MyBufferReader o)

{

return this.currentNumber - o.currentNumber;

}

}

Key Methods

- Constructor: Initializes the buffer reader and reads the first number.

- reload(): Reads the next number from the file.

- close(): Closes the buffer reader.

- peek(): Returns the current number without advancing the reader.

- pop(): Returns the current number and advances the reader.

- compareTo(): Compares the current number with another

MyBufferReader’s current number.

ExternalSort.java

The ExternalSort class handles the external sorting process. It reads the input file, sorts the data in batches, and merges the sorted batches into the final output file.

import java.io.*;

import java.util.*;

public class ExternalSort

{

File inputFile;

public ExternalSort(File inputFile) {

this.inputFile = inputFile;

}

public void sort() throws IOException {

List<File> tempFiles = createSortedTempFiles(10);

PriorityQueue<MyBufferReader> pq = new PriorityQueue<>();

for (File t : tempFiles) {

pq.add(new MyBufferReader(t));

}

File output = new File("./output.txt");

BufferedWriter bw = new BufferedWriter(new FileWriter(output));

while (!pq.isEmpty()) {

MyBufferReader m = pq.poll();

bw.write(m.pop() + "");

bw.newLine();

if (m.hasNumber) {

pq.add(m);

} else {

m.close();

}

}

bw.close();

}

public List<File> createSortedTempFiles(long batchSize) throws IOException {

List<File> tempFiles = new ArrayList<>();

BufferedReader br = new BufferedReader(new FileReader(inputFile));

String number;

List<Integer> numbers = new ArrayList<>();

while (( number = br.readLine()) != null ) {

numbers.add(Integer.parseInt(number));

if (numbers.size() >= batchSize) {

File tempFile = sortAndCreate(numbers);

tempFiles.add(tempFile);

numbers.clear();

}

}

if (!numbers.isEmpty()) {

File tempFile = sortAndCreate(numbers);

tempFiles.add(tempFile);

}

return tempFiles;

}

private File sortAndCreate(List<Integer> numbers) throws IOException {

Collections.sort(numbers);

String fileName = "./" + UUID.randomUUID() + ".txt";

File tempFile = new File(fileName);

tempFile.deleteOnExit();

BufferedWriter bw = new BufferedWriter(new FileWriter(tempFile));

for (int num : numbers) {

bw.write(num + "");

bw.newLine();

}

bw.close();

return tempFile;

}

}

Key Methods

- sort(): Orchestrates the sorting process by creating sorted temporary files and merging them into the final output file.

- createSortedTempFiles(): Reads the input file, sorts the data in batches, and writes each batch to a temporary file.

- sortAndCreate(): Sorts a list of integers and writes them to a temporary file.

Test.java

The Test class contains the main method, which serves as the entry point for the program. It generates a random input file, initializes the ExternalSort class, and starts the sorting process.

import java.util.*;

import java.io.*;

public class Test {

public static void main(String[] args) throws IOException {

File f = new File("input.txt");

BufferedWriter bw = new BufferedWriter(new FileWriter((f)));

Random r = new Random();

for (int i = 0; i < 200; i++) {

bw.write(r.nextInt(200) + "");

bw.newLine();

}

bw.close();

ExternalSort externalSort = new ExternalSort(f);

externalSort.sort();

}

}

Key Steps

- Generate Input File: Creates an input file with 200 random integers.

- Initialize ExternalSort: Creates an instance of

ExternalSortwith the input file. - Start Sorting: Calls the

sort()method to perform the external sorting.