What is a Process?

A process is a program in execution. Unlike a program stored on disk, it is active and has a lifecycle.

A process’s representation in memory consists of:

- Text Section: Contains the program’s executable code. (The current activity is tracked separately, by the program counter and CPU registers.)

- Data Section: Contains global and static variables.

- Heap Section: Contains dynamically allocated memory.

- Stack Section: Contains temporary data for function calls, such as parameters, return addresses, and local variables.

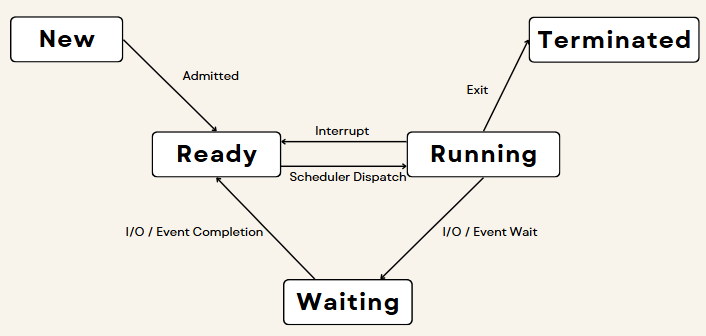

Process States

A process can be in one of the following states:

- New: The process is being created.

- Ready: The process is waiting to be assigned to a processor.

- Running: Instructions are being executed.

- Waiting (Blocked): The process is waiting for some event to occur, such as I/O completion.

- Terminated: The process has finished execution.

- Suspended: The process is temporarily moved (swapped out) to secondary storage to free memory for other processes.

Process transitions:

- New -> Ready: Admitted by the long-term scheduler

- Ready -> Running: Scheduled for execution

- Running -> Ready: Time slice ended, process preempted

- Running -> Blocked: Waiting for resources like I/O

- Blocked -> Ready: Resources available

- Running -> Terminated: Process finished execution

- Blocked -> Terminated: Process aborted
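The transitions above form a small state machine. The sketch below encodes them as a Java enum plus a lookup of legal next states. It uses `BLOCKED` for the Waiting state to match the transition list, adds New -> Ready (admission), and leaves out Suspended because the list gives no transitions for it. The class and method names are hypothetical.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of the process state transitions listed above
public class ProcessStateMachine {
    public enum ProcessState { NEW, READY, RUNNING, BLOCKED, TERMINATED }

    // States reachable in one step from the given state
    public static Set<ProcessState> nextStates(ProcessState from) {
        switch (from) {
            case NEW:     return EnumSet.of(ProcessState.READY);          // admitted
            case READY:   return EnumSet.of(ProcessState.RUNNING);        // scheduled
            case RUNNING: return EnumSet.of(ProcessState.READY,           // preempted
                                            ProcessState.BLOCKED,         // waits for I/O
                                            ProcessState.TERMINATED);     // finished
            case BLOCKED: return EnumSet.of(ProcessState.READY,           // resource available
                                            ProcessState.TERMINATED);     // aborted
            default:      return EnumSet.noneOf(ProcessState.class);      // TERMINATED is final
        }
    }

    public static boolean canTransition(ProcessState from, ProcessState to) {
        return nextStates(from).contains(to);
    }

    public static void main(String[] args) {
        System.out.println(canTransition(ProcessState.RUNNING, ProcessState.BLOCKED)); // true
        System.out.println(canTransition(ProcessState.BLOCKED, ProcessState.RUNNING)); // false
    }
}
```

Note that a blocked process cannot go straight back to Running: it must first return to the ready queue and be scheduled again.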

Types of Processes

| Process Type | Description |
|---|---|
| User Process | Created by users, runs in user mode. |
| System Process | Created by the operating system, runs in kernel mode. |
| Foreground Process | Interacts with the user. |
| Background Process | Runs in the background without direct user interaction. |
| Daemon Process | A background process that runs without user intervention, usually running indefinitely and providing services. |
| Zombie Process | A terminated process whose entry remains in the process table until the parent reads its exit status. |
| Orphan Process | A child process whose parent process has terminated; on Unix-like systems it is typically adopted by init. |
| Init Process | The first user-space process started by the kernel, with process ID 1. |

Process Control Block (PCB)

The PCB is a data structure that contains information about the process:

- Process ID: Unique identifier

- Process State: Current state of the process

- Program Counter: Address of the next instruction

- CPU Registers: Contents of the registers

- CPU Scheduling Information: Priority, time limits

- Memory Management Information: Memory limits, page tables

- Accounting Information: CPU time used, time limits

- I/O Status Information: Open files, devices
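The PCB fields listed above map naturally onto a plain record-like class. The following is a hypothetical, simplified sketch: field names and types are illustrative, memory information is reduced to a base/limit pair instead of page tables, and no real kernel lays out its PCB this way.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified PCB: field names and types are illustrative only
public class ProcessControlBlock {
    public enum State { NEW, READY, RUNNING, WAITING, TERMINATED }

    // Process ID and process state
    final int pid;
    State state = State.NEW;

    // Program counter and CPU registers, saved on a context switch
    long programCounter;
    final long[] registers = new long[16];

    // CPU scheduling information
    int priority;

    // Memory-management information (simplified to a base/limit pair)
    long memoryBase;
    long memoryLimit;

    // Accounting information
    long cpuTimeUsedNanos;

    // I/O status information
    final List<String> openFiles = new ArrayList<>();

    public ProcessControlBlock(int pid, int priority) {
        this.pid = pid;
        this.priority = priority;
    }

    @Override
    public String toString() {
        return String.format("PCB[pid=%d, state=%s, priority=%d, pc=%d]",
                pid, state, priority, programCounter);
    }

    public static void main(String[] args) {
        ProcessControlBlock pcb = new ProcessControlBlock(42, 5);
        pcb.state = State.READY;
        pcb.openFiles.add("/tmp/log.txt"); // hypothetical open file
        System.out.println(pcb); // PCB[pid=42, state=READY, priority=5, pc=0]
    }
}
```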

Functions of PCB:

- Helps in context switching.

- Helps in process scheduling.

- Helps in process synchronization.

Process Operations

Process Creation

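The act of creating a new process. On Unix-like systems this is usually done with the fork() system call, often followed by exec() to load a different program into the child.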

Scheduling

The process scheduler selects a process from the ready queue to run on the CPU.

Execution

The CPU executes the process’s instructions. During execution, the process moves between the running, ready, and blocked states.

Process Termination

The process finishes execution and is removed from the system.
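Java does not expose fork(); instead, `ProcessBuilder` creates a child process that runs a new program, which is roughly fork() followed by exec(). The sketch below walks through creation, execution, and termination: it starts a child, and the parent blocks in `waitFor()` until the child exits and returns its exit status. It assumes Java 11 or later, and the choice of child program (the current Java runtime with `-version`) is only a portable stand-in; `SpawnChild` is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.Path;

public class SpawnChild {
    // Creates a child process, waits for it to terminate, and returns its exit status
    public static int runChild() throws IOException, InterruptedException {
        // Child program: the same Java runtime that runs this code, asked to print its version
        String javaBin = Path.of(System.getProperty("java.home"), "bin", "java").toString();
        ProcessBuilder builder = new ProcessBuilder(javaBin, "-version");
        builder.redirectErrorStream(true);                       // merge stderr into stdout
        builder.redirectOutput(ProcessBuilder.Redirect.DISCARD); // only the exit status matters here

        Process child = builder.start();                         // creation
        System.out.println("Created child process, PID " + child.pid());
        return child.waitFor();                                  // parent blocks until the child terminates
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        System.out.println("Child exit status: " + runChild());
    }
}
```

An exit status of 0 conventionally means the child finished successfully.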

CPU-Bound vs I/O-Bound Processes

- CPU-Bound Process: Requires a lot of CPU time, spends more time in the running state.

- I/O-Bound Process: Requires a lot of I/O operations, spends more time in the waiting state.

Process mix: the combination of CPU-bound and I/O-bound processes in the system. A balanced mix keeps both the CPU and the I/O devices busy.
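One way to see the CPU-bound vs I/O-bound difference is to compare how much CPU time a task consumes against how much wall-clock time it takes. The sketch below uses the JDK's `ThreadMXBean` to measure the current thread's CPU time. The I/O-bound task is only a stand-in: `Thread.sleep` models waiting for a slow device, and the loop size and sleep length are arbitrary. `BoundDemo` is a hypothetical name.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;

public class BoundDemo {
    private static final ThreadMXBean MX = ManagementFactory.getThreadMXBean();
    private static volatile long sink; // keeps the JIT from discarding the CPU-bound loop

    // Fraction of wall-clock time that the current thread actually spent on the CPU
    static double cpuShare(Runnable task) {
        long cpuStart = MX.getCurrentThreadCpuTime(); // returns -1 if measurement is unsupported
        long wallStart = System.nanoTime();
        task.run();
        long cpu = MX.getCurrentThreadCpuTime() - cpuStart;
        long wall = System.nanoTime() - wallStart;
        return (double) cpu / wall;
    }

    // CPU-bound: pure computation, no waiting
    static void cpuBound() {
        long x = 0;
        for (long i = 0; i < 50_000_000L; i++) x += i % 7;
        sink = x;
    }

    // I/O-bound stand-in: sleeping models time spent waiting for a device
    static void ioBound() {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.printf("CPU-bound share: %.2f%n", cpuShare(BoundDemo::cpuBound));
        System.out.printf("I/O-bound share: %.2f%n", cpuShare(BoundDemo::ioBound));
    }
}
```

On an otherwise idle machine, the CPU-bound share should be close to 1 and the I/O-bound share close to 0, because the sleeping thread spends nearly all of its time off the CPU.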

Process Scheduling and Scheduling Algorithms

The goals are to maximize CPU utilization and throughput, and to minimize turnaround time, waiting time, and response time.

Schedulers

- Long-term Scheduler: Selects processes from the job queue and loads them into memory, i.e., brings them into the ready queue. Controls the degree of multiprogramming.

- Short-term Scheduler (also called CPU scheduler): Selects a process from the ready queue and allocates the CPU to it, i.e., selects the next process to run.

- Medium-term Scheduler: Swaps processes out of memory and later back in, which can reduce the degree of multiprogramming.

Scheduling Algorithms

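- First-Come, First-Served (FCFS): Runs processes in order of arrival time.

- Shortest Job Next (SJN), also called Shortest Job First (SJF): Runs the process with the shortest next CPU burst first.

- Priority Scheduling: Runs the process with the highest priority first.

- Round Robin (RR): Each process runs for at most one fixed time slice (quantum), then goes to the back of the ready queue.

- Multilevel Queue Scheduling: Splits the ready queue into several queues (e.g., foreground and background), each with its own scheduling algorithm; a process stays in the queue it is assigned to.

- Multilevel Feedback Queue Scheduling: Like multilevel queue scheduling, but a process can move between queues based on its behavior (e.g., a process that uses too much CPU time is moved to a lower-priority queue).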
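To compare FCFS, SJN, and Round Robin, the sketch below computes the average waiting time for the same set of CPU bursts. It assumes all processes arrive at time 0, burst lengths are known in advance, and context-switch cost is zero; the burst values and the quantum of 4 are illustrative choices. Priority and multilevel scheduling are not modeled. `SchedulingDemo` is a hypothetical name.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

// Assumptions: all processes arrive at time 0, bursts are known, switches are free
public class SchedulingDemo {

    // FCFS: each process waits for the bursts of every process ahead of it
    static double fcfsAvgWait(int[] bursts) {
        long elapsed = 0, totalWait = 0;
        for (int b : bursts) {
            totalWait += elapsed;
            elapsed += b;
        }
        return (double) totalWait / bursts.length;
    }

    // Non-preemptive SJN: FCFS applied to the bursts sorted shortest first
    static double sjnAvgWait(int[] bursts) {
        int[] sorted = bursts.clone();
        Arrays.sort(sorted);
        return fcfsAvgWait(sorted);
    }

    // Round Robin: waiting time = completion time - burst time (arrival is 0)
    static double rrAvgWait(int[] bursts, int quantum) {
        int n = bursts.length;
        int[] remaining = bursts.clone();
        long[] completion = new long[n];
        Deque<Integer> ready = new ArrayDeque<>();
        for (int i = 0; i < n; i++) ready.addLast(i);
        long time = 0;
        while (!ready.isEmpty()) {
            int p = ready.pollFirst();
            int slice = Math.min(quantum, remaining[p]);
            time += slice;
            remaining[p] -= slice;
            if (remaining[p] > 0) ready.addLast(p); // preempted: back of the queue
            else completion[p] = time;
        }
        long totalWait = 0;
        for (int i = 0; i < n; i++) totalWait += completion[i] - bursts[i];
        return (double) totalWait / n;
    }

    public static void main(String[] args) {
        int[] bursts = {24, 3, 3};
        System.out.println("FCFS:    " + fcfsAvgWait(bursts));   // 17.0
        System.out.println("SJN:     " + sjnAvgWait(bursts));    // 3.0
        System.out.println("RR(q=4): " + rrAvgWait(bursts, 4));  // about 5.67
    }
}
```

With one long job at the front, FCFS makes the short jobs wait behind it (the convoy effect); SJN minimizes average waiting time, and RR lands in between while giving every process regular CPU access.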

Context Switching

The process of saving the state of the current process and loading the state of another process.

Steps:

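- Save the CPU state (program counter, registers) of the current process.

- Update the PCB of the current process (e.g., set its state to Ready or Waiting).

- Move the PCB of the current process to the appropriate queue (the ready queue or an I/O wait queue).

- Select the next process to run.

- Load the saved state of the next process.

- Update the PCB of the next process (set its state to Running).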
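The context-switch steps can be traced on a toy model. In the sketch below, a simulated CPU holds a program counter and two registers, and each hypothetical `Pcb` stores the saved copy of that context. This is only an illustration of the bookkeeping: a real kernel performs the switch in privileged code on actual hardware registers.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy simulation only; all class and field names are hypothetical
public class ContextSwitchDemo {
    enum State { READY, RUNNING }

    // Minimal PCB: a name, a state, and the saved CPU context
    static final class Pcb {
        final String name;
        State state = State.READY;
        int savedPc;
        int[] savedRegs = new int[2];
        Pcb(String name, int startPc) { this.name = name; this.savedPc = startPc; }
    }

    // Simulated CPU: program counter plus two general-purpose registers
    static final class Cpu {
        int pc;
        int[] regs = new int[2];
    }

    // Switches the CPU from `current` to the process at the head of the ready queue
    static Pcb contextSwitch(Cpu cpu, Pcb current, Deque<Pcb> readyQueue) {
        current.savedPc = cpu.pc;                // save the current process's state
        current.savedRegs = cpu.regs.clone();
        current.state = State.READY;             // update its PCB
        readyQueue.addLast(current);             // move it to the ready queue (preempted)
        Pcb next = readyQueue.pollFirst();       // select the next process (FIFO)
        cpu.pc = next.savedPc;                   // load the next process's state
        cpu.regs = next.savedRegs.clone();
        next.state = State.RUNNING;              // update its PCB
        return next;
    }

    public static String runDemo() {
        Cpu cpu = new Cpu();
        Pcb p1 = new Pcb("P1", 100);
        Pcb p2 = new Pcb("P2", 200);
        Deque<Pcb> ready = new ArrayDeque<>();
        ready.addLast(p2);

        // P1 is running and has executed a few instructions
        p1.state = State.RUNNING;
        cpu.pc = p1.savedPc + 3; // pc = 103
        cpu.regs[0] = 7;

        Pcb running = contextSwitch(cpu, p1, ready);   // P1 -> P2
        String first = running.name + "@" + cpu.pc;
        running = contextSwitch(cpu, running, ready);  // P2 -> P1
        return first + " " + running.name + "@" + cpu.pc + " r0=" + cpu.regs[0];
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // P2@200 P1@103 r0=7
    }
}
```

When P1 is switched back in, it resumes at exactly the program counter and register values it had when it was preempted, which is the whole purpose of saving its context in the PCB.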

Interprocess Communication (IPC)

IPC mechanisms enable processes to communicate and synchronize their actions.

Processes are of 2 types:

- Independent Processes: Cannot affect or be affected by other processes; they share no data.

- Cooperating Processes: Can affect or be affected by other processes, for example by sharing data.

IPC is needed mainly by cooperating processes, since they share data and can affect one another.

IPC is done through two models:

- Shared Memory: Processes share a portion of memory.

- Message Passing: Processes exchange messages.
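The message-passing model can be sketched with a bounded queue acting as a mailbox: the sender calls a blocking "send" and the receiver a blocking "receive", and no variables are shared. For a self-contained demo, the two parties here are threads in one JVM; communication between separate processes would go through OS facilities such as pipes, sockets, or message queues. The class name and the end-of-stream marker are hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class MessagePassingDemo {
    // Sends the numbers 1..10 as messages and returns the receiver's total
    public static int runDemo() throws InterruptedException {
        BlockingQueue<Integer> mailbox = new ArrayBlockingQueue<>(4); // bounded mailbox
        final int endMarker = -1; // hypothetical "no more messages" value

        Thread sender = new Thread(() -> {
            try {
                for (int i = 1; i <= 10; i++) mailbox.put(i); // send: blocks while the mailbox is full
                mailbox.put(endMarker);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        sender.start();

        int sum = 0;
        while (true) {
            int msg = mailbox.take(); // receive: blocks until a message arrives
            if (msg == endMarker) break;
            sum += msg;
        }
        sender.join();
        return sum;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Received total: " + runDemo()); // 55
    }
}
```

Because both `put` and `take` block, the mailbox also synchronizes the two sides: the sender cannot run more than four messages ahead of the receiver.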

IPC Synchronization Mechanisms:

- Mutexes: Lock-based mechanism for exclusive resource access

- Semaphores: Signaling mechanism to control access to shared resources

- Pipe: Unidirectional or bidirectional communication channel
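A counting semaphore limits how many parties may use a resource at the same time. The sketch below uses `java.util.concurrent.Semaphore` with threads in one JVM to stand in for processes: `acquire()` plays the role of wait (P) and `release()` of signal (V). The thread count, permit count, and 20 ms "work" period are arbitrary, and `SemaphoreDemo` is a hypothetical name.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

public class SemaphoreDemo {
    // Starts `threads` workers and returns the largest number seen using the resource at once
    public static int maxConcurrentUsers(int threads, int permits) throws InterruptedException {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger inUse = new AtomicInteger();
        AtomicInteger maxSeen = new AtomicInteger();
        Thread[] workers = new Thread[threads];

        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    semaphore.acquire();              // wait (P): blocks if no permit is left
                    try {
                        int now = inUse.incrementAndGet();
                        maxSeen.accumulateAndGet(now, Math::max);
                        Thread.sleep(20);             // simulate using the shared resource
                    } finally {
                        inUse.decrementAndGet();
                        semaphore.release();          // signal (V): return the permit
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) w.join();
        return maxSeen.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("Max concurrent users: " + maxConcurrentUsers(6, 2));
    }
}
```

With two permits, the reported maximum can never exceed 2, no matter how many threads compete; a semaphore with a single permit behaves like a mutex.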

Threads

A thread is a single sequential flow of execution within a process. It shares the process’s resources (code, data, open files) but has its own program counter, stack, and set of registers.

Why do we need threads?

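- On multi-core processors they can run in parallel, improving performance.

- They share the process’s memory and resources, so they can exchange data directly without IPC.

- They are lightweight: creating and switching between threads costs less than for processes. Each thread has its own Thread Control Block (TCB).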
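The split between shared and private state is visible in a few lines of Java. In the sketch below, the `results` array lives on the heap and is shared by both threads, while each thread's local `partial` variable lives on that thread's own stack. The class name and the summing task are illustrative.

```java
public class SharedMemoryThreads {
    // Two threads each sum half of 1..100 and write into a shared array
    public static long[] run() throws InterruptedException {
        long[] results = new long[2]; // on the heap: shared by all threads of the process

        Thread t0 = new Thread(() -> {
            long partial = 0;                      // local: on t0's own stack
            for (int i = 1; i <= 50; i++) partial += i;
            results[0] = partial;                  // communicate through shared memory, no IPC
        });
        Thread t1 = new Thread(() -> {
            long partial = 0;                      // a separate copy on t1's stack
            for (int i = 51; i <= 100; i++) partial += i;
            results[1] = partial;
        });

        t0.start();
        t1.start();
        t0.join(); // join() also guarantees the threads' writes are visible here
        t1.join();
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        long[] r = run();
        System.out.println(r[0] + " + " + r[1] + " = " + (r[0] + r[1])); // 1275 + 3775 = 5050
    }
}
```

Each thread writes to a different array slot, so no lock is needed here; when threads update the same shared location, synchronization becomes necessary.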

Components of a Thread

- Program Counter: Contains the address of the next instruction.

- Registers: The thread’s own set of CPU register values, saved and restored when the thread is switched out and back in.

- Stack Space: Contains local variables and function calls.

- Thread ID: Unique identifier.

- Thread State: Running, ready, blocked.

- Thread Priority: Influences the order in which the scheduler runs threads.

Thread States

| State | Description |
|---|---|
| New | The thread has been created but has not yet started executing. |
| Runnable | The thread is ready to run and is waiting for CPU time. |
| Blocked | The thread is waiting for an event or resource to become available. |
| Terminated | The thread has finished execution and is no longer active. |
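Java exposes these states through `Thread.getState()`. Its `Thread.State` enum has NEW, RUNNABLE (covering both ready and running), BLOCKED, WAITING, TIMED_WAITING, and TERMINATED, so it is a finer-grained version of the table. The sketch below forces a thread through New, Blocked, and Terminated by making it wait for a lock that the main thread holds; the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class ThreadStatesDemo {
    // Records a worker thread's state before start, while blocked, and after it finishes
    public static List<Thread.State> observeStates() throws InterruptedException {
        List<Thread.State> seen = new ArrayList<>();
        Object lock = new Object();

        Thread worker = new Thread(() -> {
            synchronized (lock) {
                // empty: the worker only needs to acquire and release the lock
            }
        });
        seen.add(worker.getState());                       // NEW: created, not started

        synchronized (lock) {                              // main holds the lock...
            worker.start();
            while (worker.getState() != Thread.State.BLOCKED) {
                Thread.sleep(1);                           // ...so the worker must block on it
            }
            seen.add(worker.getState());                   // BLOCKED
        }                                                  // lock released: worker can finish

        worker.join();
        seen.add(worker.getState());                       // TERMINATED
        return seen;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(observeStates()); // [NEW, BLOCKED, TERMINATED]
    }
}
```

`Thread.sleep` does not release monitors, so the main thread keeps the lock while polling and the worker is guaranteed to reach BLOCKED.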

Types of Threads

- User-Level Threads: Managed by the application.

- Kernel-Level Threads: Managed by the OS.

Differences Between User Level Threads and Kernel Level Threads

| Feature | User Level Threads (ULT) | Kernel Level Threads (KLT) |
|---|---|---|
| Management | Managed by user-level libraries | Managed by the operating system |
| Creation and Termination | Faster, as it does not require kernel intervention | Slower, as it involves system calls |
| Context Switching | Faster, as it does not involve the kernel | Slower, as it involves the kernel |
| Scheduling | Handled by the thread library in user space | Handled by the kernel |
| Blocking | If one thread makes a blocking system call, all threads in the process are blocked | If one thread blocks, other threads can continue execution |
| Portability | More portable, as they are implemented in user space | Less portable, as they are dependent on the operating system |
| Resource Sharing | Shares resources with other threads in the same process | Shares resources with other threads in the same process |
| Kernel Involvement | Kernel is unaware of user-level threads | Kernel is aware and manages kernel-level threads |
| Examples | GNU Portable Threads (GNU Pth), green threads in early Java versions | Windows threads, Linux threads (including Pthreads as implemented by NPTL) |

What is Multi-threading?

Multi-threading is a programming and execution model that allows multiple threads to exist within the context of a single process. These threads share the process’s resources but execute independently. Multi-threading is used to improve the performance of applications by allowing concurrent execution of tasks, which can lead to better resource utilization and responsiveness.

Key benefits of multi-threading include:

- Parallelism: Threads can run in parallel on multi-core processors, leading to faster execution of tasks.

- Responsiveness: Applications remain responsive by performing time-consuming operations in the background.

- Resource Sharing: Threads within the same process share memory and resources, facilitating efficient communication and data exchange.

- Scalability: Multi-threaded applications can scale better with increasing workloads and hardware capabilities.
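The parallelism benefit can be sketched with an `ExecutorService`: the work is split into independent chunks, each chunk runs as a task on a thread pool sized to the available cores, and the partial results are combined at the end. Summing a range of numbers is just an illustrative workload, and `ParallelSum` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ParallelSum {
    // Sums 1..n by splitting the range into `tasks` chunks run on a thread pool
    public static long sum(long n, int tasks) throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(Runtime.getRuntime().availableProcessors());
        try {
            List<Future<Long>> parts = new ArrayList<>();
            long chunk = n / tasks;
            for (int t = 0; t < tasks; t++) {
                final long from = t * chunk + 1;
                final long to = (t == tasks - 1) ? n : (t + 1) * chunk; // last chunk takes the rest
                parts.add(pool.submit(() -> {
                    long s = 0;
                    for (long i = from; i <= to; i++) s += i;
                    return s;                       // each task runs independently
                }));
            }
            long total = 0;
            for (Future<Long> f : parts) total += f.get(); // wait for and combine partial sums
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(sum(1_000_000, 8)); // 500000500000
    }
}
```

Because the chunks share no mutable state, the tasks need no locking; each thread only touches its own local sum.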

Why is Multi-Threading faster?

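On a single-core processor, only one thread executes at any instant; the CPU switches between threads so quickly that they appear to run simultaneously. This improves responsiveness but not raw speed. Multithreading makes a program faster when threads run truly in parallel on multiple cores, or when one thread can compute while another waits for I/O. Threads are also cheaper to create and switch between than processes, because they share one address space.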

Thread vs Process

| Feature | Thread | Process |
|---|---|---|
| Definition | The smallest unit of execution within a process. | An independent program in execution. |
| Creation | Faster, as it involves fewer resources. | Slower, as it involves more resources. |
| Memory Sharing | Shares memory and resources with other threads in the same process. | Has its own memory space and resources. |
| Communication | Easier and faster, as threads share the same address space. | More complex, requires Inter-Process Communication (IPC) mechanisms. |
| Overhead | Lower, as threads are lightweight. | Higher, as processes are heavier. |
| Context Switching | Faster, as it involves switching within the same process. | Slower, as it involves switching between different address spaces. |
| Dependency | Threads within the same process are dependent on each other. | Processes are independent of each other. |
| Crash Impact | If a thread crashes, it may bring down the entire process. | If a process crashes, other processes are usually unaffected. |
| Examples | A word processor with separate threads for user input, spell-checking, and auto-save. | Separate programs running side by side; browsers such as Chrome also run tabs in separate processes. |

Race Condition

In a multi-threaded environment, threads often share resources such as variables, data structures, or files. When multiple threads try to read and write to these shared resources without proper synchronization, a race condition can occur. The threads “race” to access the resource, and the final state of the resource depends on the timing of their execution, which can vary each time the program runs.

Example of a Race Condition in Java

```java
public class BankAccount {
    private int balance = 0; // Shared balance variable

    public void deposit(int amount) {
        int newBalance = balance + amount; // Step 1: Read balance
        balance = newBalance;              // Step 2: Update balance
    }

    public int getBalance() {
        return balance;
    }

    public static void main(String[] args) {
        BankAccount account = new BankAccount();

        Thread person1 = new Thread(() -> {
            for (int i = 0; i < 1000; i++) {
                account.deposit(1); // Each deposit increases balance by 1
            }
        });

        Thread person2 = new Thread(() -> {
            for (int i = 0; i < 1000; i++) {
                account.deposit(1);
            }
        });

        person1.start();
        person2.start();

        try {
            person1.join();
            person2.join();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }

        System.out.println("Final Account Balance: " + account.getBalance());
    }
}
```

In this example, we simulate a race condition with two threads (person1 and person2) that deposit money into a shared bank account. Each thread deposits 1 unit of money 1000 times, so we expect the final balance to be 2000. However, because deposit() is not synchronized, the two threads can interleave their reads and writes of balance, so some runs print a final balance below 2000.

Why It’s a Race Condition

- The deposit() method is not synchronized, so both threads can read the balance variable at the same time.

- If both threads read the balance simultaneously, they may calculate and write back the same newBalance, resulting in missed increments and an incorrect final balance.

Solution: Synchronize Access to balance

To fix this, we can add synchronization to ensure that only one thread can execute the deposit() method at a time, making the updates to balance thread-safe.

This can be done by adding synchronized to the deposit() method, or by using a ReentrantLock to control access to the shared resource, or by using atomic operations like AtomicInteger.
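Two of those fixes can be sketched side by side: an `AtomicInteger`, whose `addAndGet` performs the read-modify-write as one atomic step, and a `ReentrantLock` that guards the read-modify-write explicitly. Both are run on the same two-thread, 1000-deposits-each workload as the example above. The class names and the `runWorkload` helper are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.IntConsumer;
import java.util.function.IntSupplier;

public class SafeAccounts {
    // Option 1: atomic read-modify-write, no explicit lock
    static class AtomicAccount {
        private final AtomicInteger balance = new AtomicInteger();
        void deposit(int amount) { balance.addAndGet(amount); }
        int getBalance() { return balance.get(); }
    }

    // Option 2: explicit lock around the read-modify-write
    static class LockedAccount {
        private final ReentrantLock lock = new ReentrantLock();
        private int balance = 0;

        void deposit(int amount) {
            lock.lock();
            try {
                balance += amount;
            } finally {
                lock.unlock(); // always release, even if an exception is thrown
            }
        }

        int getBalance() {
            lock.lock();
            try {
                return balance;
            } finally {
                lock.unlock();
            }
        }
    }

    // Two threads each deposit 1 unit 1000 times, then the final balance is read
    static int runWorkload(IntConsumer deposit, IntSupplier balance) throws InterruptedException {
        Runnable person = () -> { for (int i = 0; i < 1000; i++) deposit.accept(1); };
        Thread p1 = new Thread(person), p2 = new Thread(person);
        p1.start();
        p2.start();
        p1.join();
        p2.join();
        return balance.getAsInt();
    }

    public static int[] runBoth() throws InterruptedException {
        AtomicAccount atomic = new AtomicAccount();
        LockedAccount locked = new LockedAccount();
        return new int[] {
            runWorkload(atomic::deposit, atomic::getBalance),
            runWorkload(locked::deposit, locked::getBalance)
        };
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = runBoth();
        System.out.println("AtomicInteger: " + r[0]); // 2000
        System.out.println("ReentrantLock: " + r[1]); // 2000
    }
}
```

`AtomicInteger` is the lighter choice for a single counter; a lock is needed when several fields must change together as one unit.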

Locks - Mutex and Critical Section

Mutex (Mutual Exclusion):

- A mutex is a synchronization primitive that ensures exclusive access to a shared resource.

- Only one thread can hold the mutex at a time, preventing other threads from accessing the resource simultaneously.

Critical Section:

- A critical section is a part of the code that accesses shared resources and must not be executed by more than one thread at a time.

- By using a mutex, we can protect the critical section, ensuring that only one thread can execute it at a time.

Example in Java:

```java
public class BankAccount {
    private int balance = 0;
    private final Object lock = new Object(); // Mutex

    public void deposit(int amount) {
        synchronized (lock) { // Critical section
            balance += amount;
        }
    }

    public int getBalance() {
        synchronized (lock) { // Reading under the same lock guarantees the latest value is visible
            return balance;
        }
    }
}
```

In this example, the synchronized block ensures that only one thread can execute the deposit method at a time, protecting the critical section and preventing race conditions.